No facial recognition? No problem! How London Bridge tested tech to track individuals inside the station

AI cameras can use this one weird trick to sidestep privacy restrictions to follow people around

Sometimes we invent a new technology, and it is immediately obvious how it could be misused in scary ways.

Facial recognition is an obvious example of this. The ability to automatically identify suspected criminals in public places could obviously make us safer – but it also ratchets us closer to living in an Orwellian surveillance society, where our every move is tracked by the authorities. We only have to glance at the nightmare in Xinjiang to understand why that might be a bad idea.

So how to balance privacy and security is a difficult question. And in Britain in 2024, this isn’t just a hypothetical exercise.

Earlier this year, I reported on how Transport for London (TfL) had been trialling AI “smart station” technology at Willesden Green station, and how Network Rail is using the same technology to watch over Liverpool Street.

In both cases, this “video analytics” technology basically took existing CCTV cameras and made them smart, able to identify people, behaviour, objects and incidents in the stations.

It meant that with AI casting its watchful eyes, station staff could conceivably receive real-time alerts for everything from serious incidents, like someone brandishing a weapon or collapsing on a platform, to more everyday problems, like crowding on an escalator or discarded litter on a platform.

As I concluded at the time, from a station management perspective, it really is an absolute no-brainer to use these technologies. In an instant, AI can turn a dumb CCTV network into a pro-active station manager, and staff can be more effectively deployed, keeping passengers safer, and ensuring the smoother running of operations.

However, in both cases there was a clear line that neither TfL nor Network Rail would cross. Both declined to use ‘biometric’ facial recognition to identify or track the people inside their stations.

It was probably a sensible move. Tracking people using their face is legal in the UK – but only in very strict circumstances. The 2018 Data Protection Act defines facial data as “special category data” – meaning there is a very high legal bar on using it.

And this is where today’s story really begins.

Imagine you’re Network Rail. It’s obvious why tracking individuals would be a good idea, for reasons of not just national security and crime prevention, but also to improve how stations are managed. But to use facial recognition would be a mountain of legal paperwork, not to mention a potential PR nightmare. So what can you do?

Today, thanks once again to the Freedom of Information Act, I can reveal the clever workaround that Network Rail has tested at London Bridge station – as well as some of the other insanely clever things that the station may be currently using AI cameras to do.


Keep up appearances

The trial began in August 2020. London Bridge, the fourth busiest station in the UK, was selected as the testbed for a video analytics system provided by a company called Ipsotek, which is a subsidiary of Atos.

Officially, the £2.442m trial, which was co-funded by InnovateUK, ran for a full year – and Network Rail has since confirmed to me that following the test, the software is today in routine use at the station, and that it is being used on 100 of the approximately 750 CCTV cameras at the station.

It has, however, declined to confirm or deny which specific analytics features, like the ones described above, are today in use at London Bridge. But with the documents I’ve obtained, I can confirm some of the capabilities that were tested. And I’ve got plenty more on other use cases later in this piece.

But let’s cut to the chase – how can AI cameras follow individuals without tracking our faces?

The answer is that they use what Network Rail and Ipsotek call our ‘appearance’ – other information about us that may not specifically identify us as individuals.

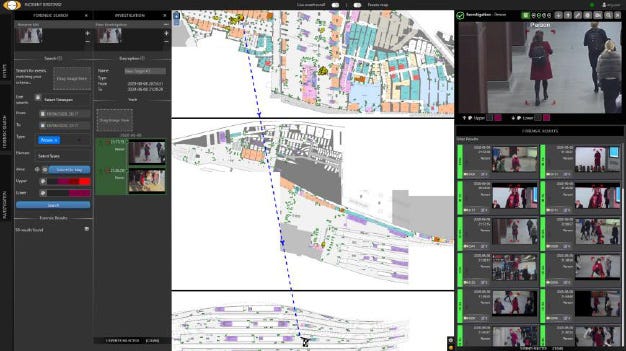

Ipsotek calls this feature “Tag and Track”. It works by looking at the non-facial characteristics of the people captured on CCTV, such as what they’re wearing, what they’re carrying and where they’re walking.

Individually these characteristics may not be unique like a face is. There is probably more than one person in the station wearing a red coat. But how many others are also wearing a black backpack? How many are women? Suddenly, if you’re searching for one specific person, it significantly narrows down your search.

And because the camera positions and the layout of the station are fixed, and because we haven’t invented teleportation yet, anyone inside the station is going to be moving relatively predictably as they pass between cameras.

So given this, you can start to see how it becomes relatively trivial to piece together the movements of any given individual.

For example, say the station staff were asked to look for an obese, white man, wearing a black hoodie, in denial about his own rapidly balding head, and carrying a bag of M&S cookies that he’s clearly going to eat all by himself… the software could easily narrow him down, whoever he is – even on a busy station concourse.

And sure, there are going to be false positives – but the Ipsotek software takes this into account, letting operators manually look through tags, adding or removing hits from the timeline, and plotting the implied route automatically on a map.
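To make the logic concrete, here is a minimal sketch of the idea described above. To be clear, this is not Ipsotek’s actual implementation: the camera names, walking times, attribute labels and function names are all made up for illustration. It simply filters sightings by appearance, then keeps only those that are physically plausible given a fixed station layout.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sighting:
    camera: str            # hypothetical camera name
    time_s: int            # seconds since the start of the footage
    attributes: frozenset  # appearance labels, e.g. "red coat"


# Assumed layout: minimum plausible walking time (seconds) between
# cameras that are directly connected. Pairs not listed are not adjacent.
WALK_TIMES = {
    ("entrance", "concourse"): 20,
    ("concourse", "platform"): 30,
}


def min_walk_time(a, b):
    """Return the minimum walking time between two cameras, or None."""
    if a == b:
        return 0
    return WALK_TIMES.get((a, b), WALK_TIMES.get((b, a)))


def track(sightings, wanted, max_gap_s=120):
    """Chain together sightings that match `wanted` and respect the layout."""
    # Step 1: narrow the search using appearance alone.
    candidates = sorted(
        (s for s in sightings if wanted <= s.attributes),
        key=lambda s: s.time_s,
    )
    # Step 2: no teleportation. Drop hits the person could not have reached.
    route = []
    for s in candidates:
        if not route:
            route.append(s)
            continue
        needed = min_walk_time(route[-1].camera, s.camera)
        elapsed = s.time_s - route[-1].time_s
        if needed is not None and needed <= elapsed <= max_gap_s:
            route.append(s)
    return route


target = frozenset({"red coat", "black backpack"})
footage = [
    Sighting("entrance", 0, target),
    Sighting("entrance", 5, frozenset({"red coat"})),  # no backpack
    Sighting("platform", 10, target),   # lookalike: not reachable that fast
    Sighting("concourse", 25, target),
    Sighting("platform", 60, target),
]
print([s.camera for s in track(footage, target)])
# prints ['entrance', 'concourse', 'platform']
```

In this toy version the first matching sighting is trusted blindly, which is exactly the sort of mistake a human operator would want to be able to correct by adding or removing hits by hand.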

I guess this video (which was posted by Ipsotek) also reveals the technology has been used in Kingston Upon Thames town centre.

Basically then, it’s very clever – not just because of what it does, but what it doesn’t do.

Though it is incredibly useful, since the station’s CCTV supervisor can follow, say, a suspected shoplifter in real time as they walk out of Boots with a suspiciously stuffed bag, it doesn’t… recognise faces.

This means that it isn’t possible to compare individuals caught by the system against a pre-existing database of terrorists, shoplifters, fare-evaders, or, say, anti-government protesters, so they can be apprehended or monitored the second they enter the station.

Perhaps I’m just a frog, sat in a pot on the stove, enjoying the gently warm water, but this seems like a relatively elegant way of threading the needle between security and privacy.

However, it appears that even this specific appearance tracking technology was a little too hot for Network Rail.

I can confirm it was tested during the year-long trial at the station – see the screenshot above, in which the Tag and Track software shows a map of London Bridge alongside what is clearly footage of a woman walking through the ‘Vaults’ shopping area of the station.

But since the system has been rolled out, Network Rail has confirmed to me that “Tag and Track is not in use,” and for good measure added that “We do not use facial recognition or real-time live tracking.”

Sadly, they didn’t elaborate on why they didn’t take it forward.

Other ways London Bridge uses AI Cameras

Right, that’s the really spicy stuff out the way – but there’s also so much more to this technology.

As I describe above, video analytics is now routinely used at London Bridge. It really does seem incredibly useful if you have to manage a major railway station. As I say, Network Rail has declined to confirm or deny what other use cases the AI cameras are being used for, but I can reveal some of the other functionality that was tested. So here are the highlights…
